“That’s just how they see themselves.” “Other people might say something different.” “They’re just telling you what they think you want to hear.” Sound familiar? These are typical attitudes toward self-report assessments, but they actually reflect the myths and misconceptions that this article sets out to examine.

We learned a great deal about the value of self-report assessments during the two years we spent developing our market-leading personality questionnaire, Wave®. Drawing on that experience, we will share some practical tips on how to make sure that self-report data, including data from interviews, is used as effectively as possible.

Surprising Truth: You can’t escape self-report

Interviews are self-report. CVs are self-report. Application forms are self-report. Yet all of these tools are used widely across the world to help recruiters make decisions about whether or not to hire someone. Imagine not using them; how else would you do it?

Aptitude tests are not self-report, and they are highly effective screeners: when it comes to predicting job performance, aptitude tests come up trumps. However, they don’t get at how a person behaves. This is where we need to bring in personality assessments, (highly structured) interviews, or observational techniques such as role plays and group exercises at an assessment center. The latter, though, can be riddled with problems.

Surprising Truth: Self-report is better than observing someone at an assessment center

There is strong evidence that interviews, when done properly, do a very good job of predicting workplace performance. The same can be said for personality questionnaires. In fact, the evidence suggests that both are better at predicting job performance than the observational techniques used as assessment center exercises.

There are several reasons for this:

- A lot of the time, assessment center exercises simply aren’t getting at things that are relevant to the role; evidence suggests that work samples or trialing the job (Job Tryout) are both better at predicting performance.

- More recent research on assessment centers also suggests that their exercises don’t actually measure competencies, so they can’t tell you what an individual is good or bad at. They tell you how good or bad that person might be at performing that particular task. This will only be predictive of workplace performance if the task is highly relevant to a major part of the job and therefore more akin to a work sample test (generally they are not!).

- And finally, with many assessment center exercises (particularly group exercises and role plays), there are just too many extraneous variables feeding in to provide a truly accurate picture; here are just some examples:

  - In group exercises, so much depends on how other people in the group behave.

  - In role plays, however clear your scripts, there will be variability in actor style and performance.

  - Some people might have experience or job knowledge that gives them an advantage in any particular exercise; others might lack that experience or job knowledge and get marked down, even if they have the underlying competency.

  - Assessment centers usually have multiple observers covering a number of candidates; even with the best training and calibration in the world, there will still be variability in observers’ notetaking, scoring and final assessment.

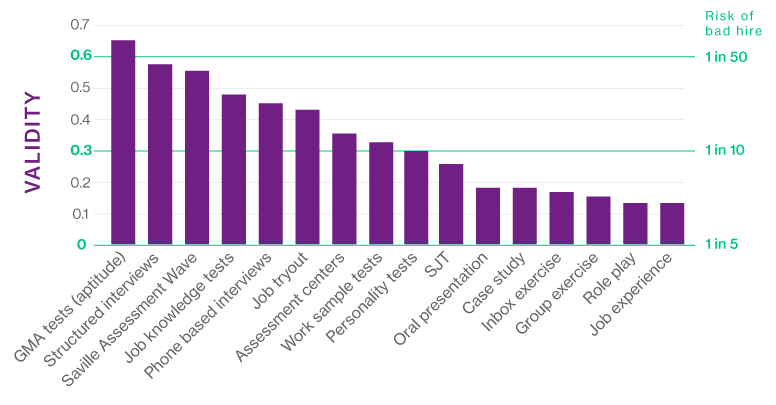

The graph below summarizes how effective different assessment methodologies are at predicting workplace performance. As we have already mentioned, aptitude tests lead the field. Structured interviews and Wave follow close behind.

Assessment center exercises, in comparison, do very poorly and struggle even to reach the industry standard validity of 0.3. A tool’s validity determines its effectiveness; the lower the validity score, the greater the risk of making the wrong selection decision and hiring a poor performer.

With no validity (a score of 0), the chances of making a poor hire are 1/5. Bring in an assessment which meets the industry standard for validity (0.3) and you reduce this to 1/10. But use a tool with a validity score of 0.6 and you reduce this to 1/50.

The relationship between validity and getting the right people, rather than the wrong people, is exponential. To maximize your hit rate, you have to climb up the validity graph and use the assessment methods proven to be most effective.
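The link between validity and the chance of a poor hire can be explored with a small simulation. The sketch below is illustrative only: the article does not state the selection ratio or the definition of a poor performer behind its 1/5, 1/10 and 1/50 figures, so the function name `poor_hire_rate`, the 20% selection ratio, the "bottom 20% of performers" cutoff and the bivariate-normal model are all assumptions, and the output is not expected to reproduce those figures exactly.

```python
import math
import random


def poor_hire_rate(validity, selection_ratio=0.2, poor_cutoff=0.2,
                   n_candidates=100_000, seed=0):
    """Estimate the share of hires who turn out to be poor performers.

    Assumptions (not taken from the article): assessment score and job
    performance are standard normal with correlation `validity`; the top
    `selection_ratio` of candidates by score are hired; a "poor performer"
    is anyone in the bottom `poor_cutoff` of performance.
    """
    rng = random.Random(seed)
    noise_weight = math.sqrt(1.0 - validity ** 2)

    candidates = []
    for _ in range(n_candidates):
        score = rng.gauss(0.0, 1.0)
        # Performance shares `validity` of its variation with the score.
        performance = validity * score + noise_weight * rng.gauss(0.0, 1.0)
        candidates.append((score, performance))

    # Performance level separating the bottom `poor_cutoff` of candidates.
    performances = sorted(p for _, p in candidates)
    threshold = performances[int(poor_cutoff * n_candidates)]

    # Hire the highest scorers on the assessment.
    candidates.sort(key=lambda c: c[0], reverse=True)
    hired = candidates[:int(selection_ratio * n_candidates)]

    return sum(1 for _, p in hired if p < threshold) / len(hired)


if __name__ == "__main__":
    for validity in (0.0, 0.3, 0.6):
        rate = poor_hire_rate(validity)
        print(f"validity {validity:.1f}: poor-hire rate {rate:.3f}")
```

Under these assumptions, a validity of 0 leaves the poor-hire rate at the base rate of poor performers, and the rate falls as validity rises. Changing `selection_ratio` or `poor_cutoff` shifts the exact numbers, which is why the defaults should be treated as placeholders rather than as the article's method.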

Effectiveness of assessment methods*

*Includes all assessment methods generally deemed acceptable for use in hiring across different occupations

Surprising Truth: You Can Improve the Effectiveness of Your Assessment

We are often asked why Wave also has such strong validity. The answer is actually very simple: it is all in the questions. One of the main lessons we took from developing Wave is that the exact wording of questions is critical and small differences really do matter; the slightest change can affect a question’s ability to predict performance.

There are 216 questions in the Wave Professional Styles questionnaire. We started with over 4000 questions, carefully crafted by a team of four highly skilled psychometricians who between them had over 100 years’ experience. We selected the very best of those questions based on how well they targeted the key behavior and then used data to identify those most effective at predicting performance.

Have a look at the list of questions below and decide whether you think they are ‘good’ or ‘bad’ in terms of effectiveness at predicting performance at work. They would usually be presented in a way that meant you had to state the extent to which you agreed or disagreed with each item.

1. I am someone who has lots of random ideas

Bad item. The problem here is the word random. Random ideas are often going to be irrelevant and so the question isn’t going to do particularly well at telling you how effective someone is likely to be at creating innovation at work. Simply removing the word random makes this a much stronger item.

2. I am very concerned about how others are feeling

Bad item. The problem here is that it isn’t an active behavior. You might have concern but not actually do anything about it. A better item is “I show empathy for others”.

3. I am very bold in what I do

Bad item. This is problematic because the word bold has multiple meanings: in England it means courageous, whereas in Ireland it means naughty. As soon as you bring in ambiguity, you lose effectiveness.

4. I rarely make mistakes

Bad item. You could disagree with this one because you believe you never make mistakes, yet when you disagree with it, the questionnaire assumes you always make mistakes. This brings in complexity and adds to the cognitive load of the assessment, which means you end up measuring cognitive skills rather than behavior.

5. I am not easily difficult to convince

Bad item. Double negatives again significantly increase cognitive load. Simplicity is key.

6. I do things by gut feel

Bad item. This one has translation issues; in some languages it translates roughly as “I do things by feeling my stomach”.

7. I am quiet and reserved

Bad item. We want to avoid ‘and’ because this means what you are getting at is much less targeted. It is also possible to be quiet but not reserved and reserved but not quiet.

Article written by Hannah Mullaney.

April, 2020